As enterprises increasingly adopt large language models (LLMs), a key challenge emerges: how to safely and reliably connect AI systems with enterprise data and platforms. Databases, network platforms, and operational systems contain valuable information, but exposing them directly to LLMs introduces concerns around governance, observability, and correctness.

To address this, we implemented an architecture based on the Model Context Protocol (MCP) that enables AI agents to interact with enterprise systems through well-defined, controlled tools rather than unrestricted access.

This blog post outlines how MCP can be applied in real-world enterprise scenarios using two use cases:

- MCP integration with Oracle Autonomous Transaction Processing (ATP)

- MCP integration with Cato Networks

The objective is to demonstrate how MCP enables structured and predictable AI interactions across different enterprise domains.

What is the Model Context Protocol (MCP)?

The Model Context Protocol (MCP) is an open protocol that allows AI agents to interact with external systems through explicit tool interfaces.

Instead of giving an LLM unrestricted access to data sources, MCP enforces a clear separation between:

- Reasoning – performed by the AI agent

- Execution – performed by tools exposed by backend systems

In an MCP-based design, the AI agent is responsible for understanding the user's intent and deciding which tools to use. The actual execution of operations, such as database queries or metric retrieval, is handled by backend services that expose these capabilities as tools.

Using MCP, an agent:

- Discovers what capabilities are available

- Selects the appropriate tools

- Invokes those tools with structured inputs

- Receives structured outputs for further reasoning or presentation

This design makes MCP particularly suitable for enterprise environments, where control, transparency, and safety are critical.
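The discover, select, invoke, receive cycle described above maps onto two JSON-RPC 2.0 methods defined by the MCP specification: `tools/list` and `tools/call`. The sketch below is a toy, in-process dispatcher rather than a real MCP server (real servers communicate over a transport such as stdio or HTTP, usually through an SDK). The `get_row_count` tool, its schema, and the row data are hypothetical.

```python
# Toy, in-process stand-in for an MCP server. The message shapes follow the
# MCP tools/list and tools/call methods; the tool and its data are hypothetical.
TOOLS = {
    "get_row_count": {
        "description": "Return the number of rows in a table.",
        "inputSchema": {
            "type": "object",
            "properties": {"table": {"type": "string"}},
            "required": ["table"],
        },
    }
}
ROW_COUNTS = {"ORDERS": 42}  # hypothetical backend data


def handle(request: dict) -> dict:
    """Dispatch one JSON-RPC 2.0 request the way an MCP server would."""
    method, req_id = request["method"], request["id"]
    if method == "tools/list":
        tools = [{"name": name, **spec} for name, spec in TOOLS.items()]
        return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}
    if method == "tools/call":
        name = request["params"]["name"]
        if name not in TOOLS:
            error = {"code": -32602, "message": f"Unknown tool: {name}"}
            return {"jsonrpc": "2.0", "id": req_id, "error": error}
        table = request["params"]["arguments"]["table"].upper()
        text = str(ROW_COUNTS.get(table, 0))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
        return {"jsonrpc": "2.0", "id": req_id, "result": result}
    return {"jsonrpc": "2.0", "id": req_id,
            "error": {"code": -32601, "message": "Method not found"}}


# 1. Discover the available tools
listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
tool_names = [t["name"] for t in listing["result"]["tools"]]

# 2-3. Select a tool and invoke it with structured arguments
reply = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                "params": {"name": "get_row_count", "arguments": {"table": "orders"}}})

# 4. Receive a structured result for further reasoning
print(tool_names, reply["result"]["content"][0]["text"])  # ['get_row_count'] 42
```

Because the agent only ever sees tool names, descriptions, and input schemas, it cannot reach anything the server does not list.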

Why MCP Works Well in Enterprise Architectures

MCP introduces several advantages when integrating AI into enterprise systems:

- Controlled access: Agents can only use predefined tools

- Clear governance: Business rules and constraints are enforced at the tool layer

- Observability: Tool usage can be logged and audited

- Modularity: New tools can be added without changing the agent logic

- Vendor flexibility: The same pattern applies across databases, APIs, and platforms

These properties make MCP a strong foundation for building AI-driven enterprise applications.
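To make controlled access, tool-layer governance, and auditing concrete, here is a minimal sketch of a tool layer that keeps an explicit registry, refuses unregistered tools, validates inputs inside the tool, and writes an audit record for every call. `tool`, `call_tool`, `REGISTRY`, and `get_site_status` are hypothetical names, not part of any MCP SDK; the official MCP Python SDK offers its own decorator-based registration through `FastMCP`.

```python
import functools
import logging

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("tool_audit")

REGISTRY = {}  # tool name -> callable; the only operations the agent may use


def tool(name):
    """Register a function as an agent-callable tool and audit every call."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(**kwargs):
            audit_log.info("tool=%s args=%s", name, kwargs)  # observability
            return fn(**kwargs)
        REGISTRY[name] = wrapper
        return wrapper
    return decorator


def call_tool(name, **kwargs):
    """Controlled access: anything outside the registry is refused."""
    if name not in REGISTRY:
        raise PermissionError(f"Tool {name!r} is not exposed to the agent")
    return REGISTRY[name](**kwargs)


@tool("get_site_status")
def get_site_status(site: str) -> str:
    # Governance: the rule lives in the tool, not in the prompt (hypothetical rule).
    if not site.isalnum():
        raise ValueError("site must be alphanumeric")
    return f"{site}: connected"  # stub response


print(call_tool("get_site_status", site="NYC01"))  # NYC01: connected
```

In this design, adding a capability means registering another decorated function; the agent-side code that discovers and calls tools does not change.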

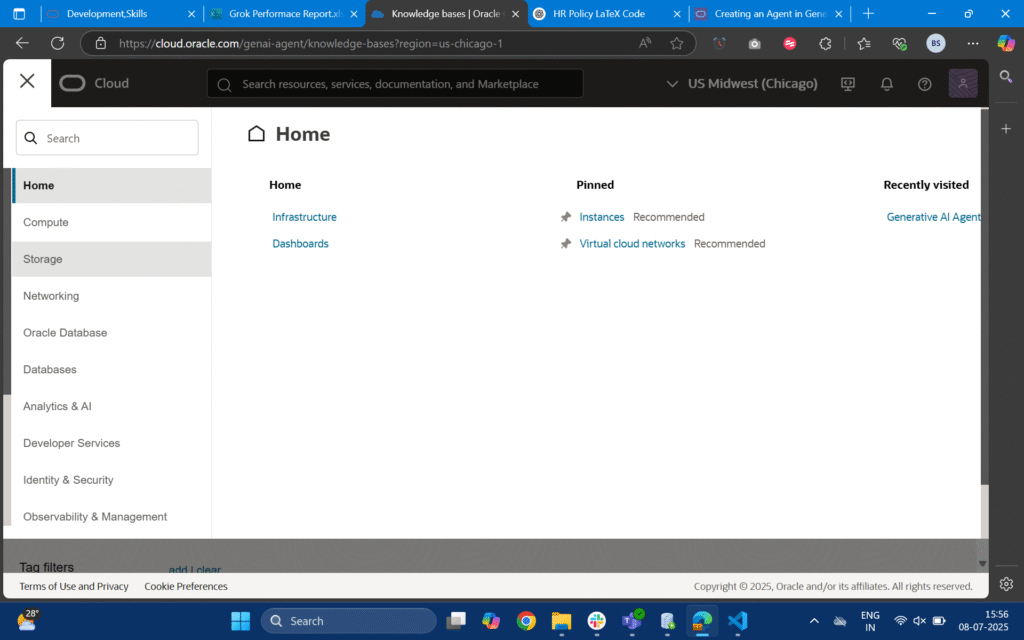

Use Case 1: MCP Integration with Oracle ATP

This use case focuses on enabling natural-language interaction with enterprise data stored in Oracle Autonomous Transaction Processing (ATP) using an MCP-based architecture.

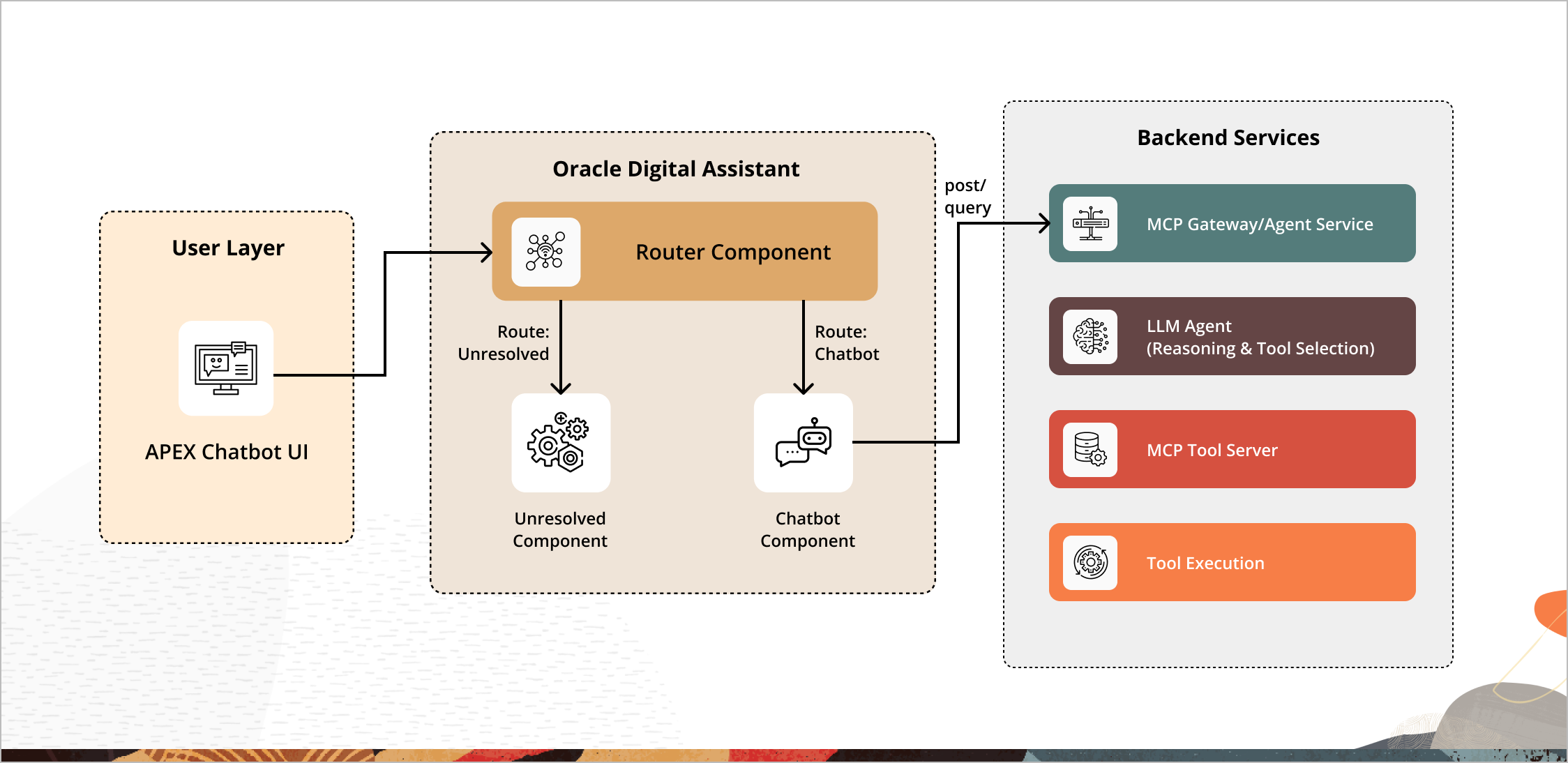

The solution integrates Oracle APEX, Oracle Digital Assistant (ODA), a FastAPI-based MCP server, and an AI agent powered by Anthropic Claude. Each layer in the architecture has a clearly defined responsibility.

High-Level Architecture Overview

At a high level, the architecture follows a consistent flow:

1. The user interacts with the chatbot interface.

2. Requests are routed and classified by an orchestration layer.

3. The AI agent reasons over the request and invokes MCP tools as required.

4. Backend systems execute the requested operations.

5. Results are returned, formatted, and rendered back to the user.

End-to-End Request Flow

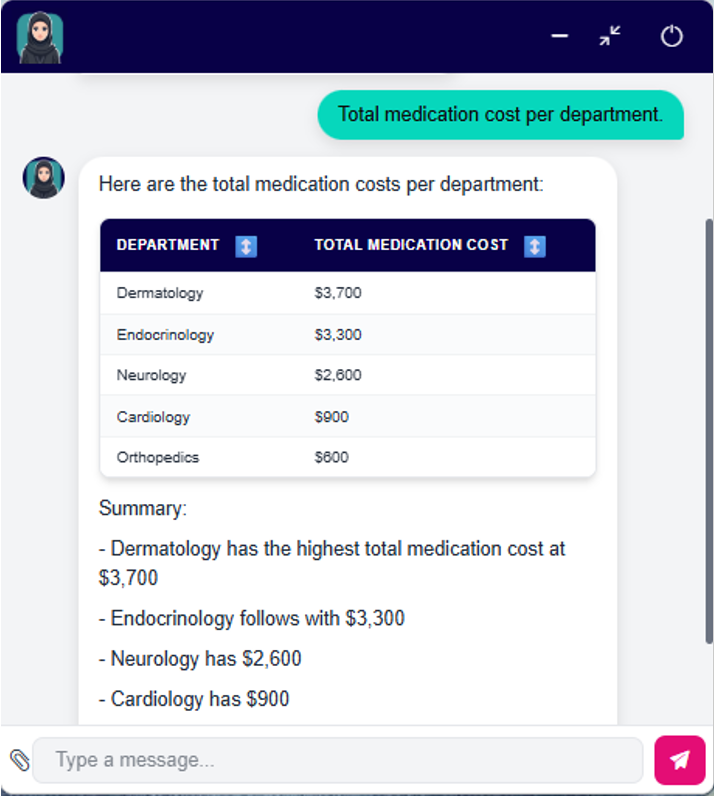

1. User Interaction (APEX Chatbot UI)

Users submit queries through an Oracle APEX-based chatbot interface. The UI layer is responsible only for collecting user input and rendering responses. It does not contain any business logic or database connectivity.

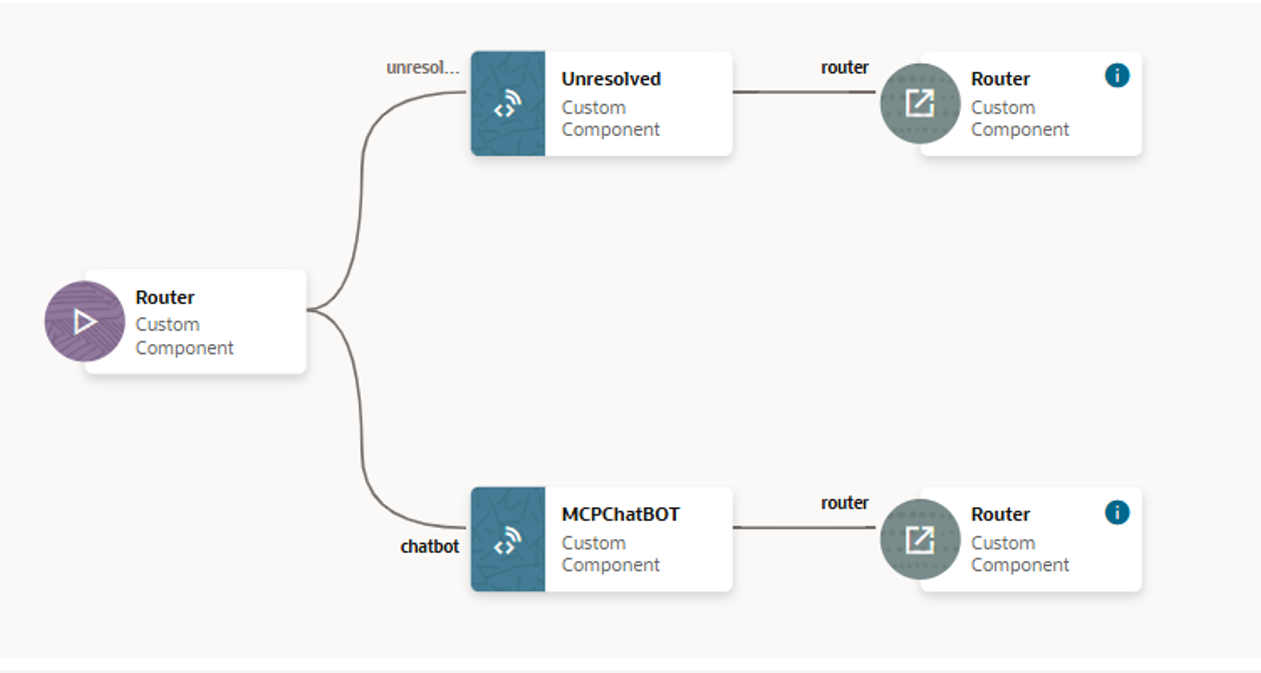

2. Oracle Digital Assistant (ODA) – Routing and Orchestration

All user messages are first handled by Oracle Digital Assistant (ODA). Within ODA, a Router Component analyzes each incoming message and determines whether the query is database-related or should be handled by a general conversational flow.

Database-related queries are routed to the Chatbot Component, which is responsible for invoking the backend MCP service. Non-database queries are handled separately.
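ODA's Router Component is configured inside ODA rather than written in Python, so the following is only an illustration of the decision it makes. It is a deliberately simple keyword-based stand-in with a hypothetical vocabulary; a production router would more likely rely on trained intents or an LLM-based classifier.

```python
import re

# Hypothetical vocabulary of database-related terms (illustration only).
DB_TERMS = re.compile(
    r"\b(tables?|columns?|rows?|schema|records?|orders?|customers?|sql|query)\b",
    re.IGNORECASE,
)


def route(message: str) -> str:
    """Return 'chatbot' for database-related messages, otherwise 'general'."""
    return "chatbot" if DB_TERMS.search(message) else "general"


for msg in ("How many rows are in the ORDERS table?", "Hi, what can you do?"):
    print(f"{msg!r} -> {route(msg)}")
```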

ODA Flow

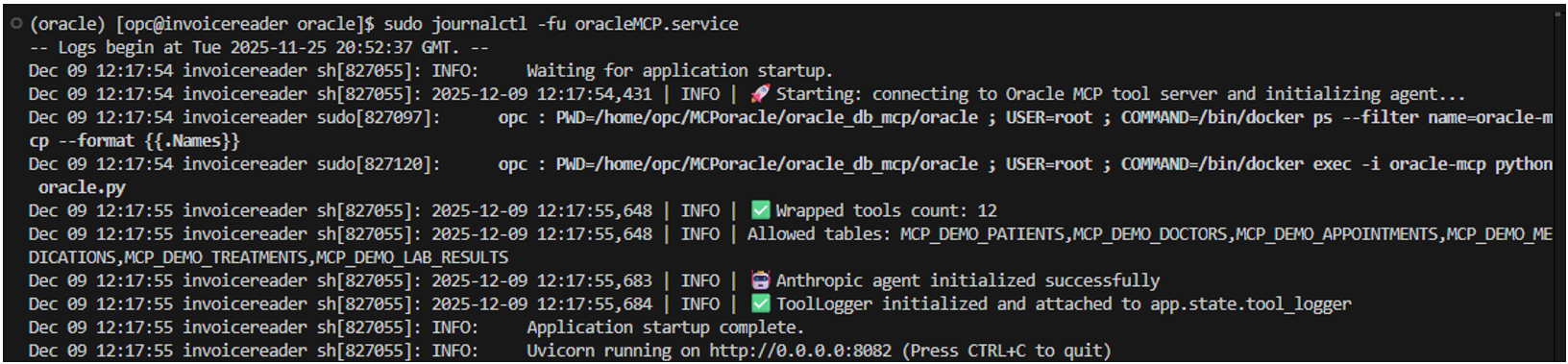

3. FastAPI-based MCP Integration Service

The Chatbot Component sends database-related queries to a FastAPI-based MCP server hosted on a virtual machine. This server acts as the integration gateway between conversational systems and backend execution. Key responsibilities of the FastAPI MCP server include:

- Initializing the AI agent during application startup

- Managing the lifecycle of MCP tool connections

- Exposing a REST endpoint to accept user queries

- Forwarding requests to the agent for reasoning and execution

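```python
# Runs during application startup (inside an async function):
# fetch the tools exposed by the MCP tool server
tools = await mcp_client.get_tools()

# Adapt tools for use by the agent framework
agent_tools = adapt_tools(tools)
```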
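The responsibilities listed above can be sketched with the async lifespan pattern that FastAPI supports: an `@asynccontextmanager` function passed as `FastAPI(lifespan=...)`, with shared objects stored on `app.state`. To keep the sketch runnable without FastAPI installed, a `SimpleNamespace` stands in for the app, and `StubAgent` is a hypothetical placeholder for the real agent and MCP client. In the real service, `query_endpoint` would be registered as an `@app.post(...)` route.

```python
import asyncio
from contextlib import asynccontextmanager
from types import SimpleNamespace


class StubAgent:
    """Hypothetical stand-in for the LangChain agent built from MCP tools."""

    async def ainvoke(self, payload: dict) -> dict:
        question = payload["messages"][-1]["content"]
        return {"messages": [{"role": "assistant", "content": f"answered: {question}"}]}


@asynccontextmanager
async def lifespan(app):
    # Startup: open MCP tool connections and build the agent once per process.
    app.state.mcp_connected = True
    app.state.agent = StubAgent()
    try:
        yield
    finally:
        # Shutdown: release the MCP tool connections.
        app.state.mcp_connected = False


async def query_endpoint(app, user_query: str) -> str:
    """Body of the REST endpoint: forward the query to the agent."""
    response = await app.state.agent.ainvoke(
        {"messages": [{"role": "user", "content": user_query}]}
    )
    return response["messages"][-1]["content"]


async def main():
    app = SimpleNamespace(state=SimpleNamespace())  # stands in for FastAPI()
    async with lifespan(app):
        answer = await query_endpoint(app, "How many orders were placed today?")
    return answer, app.state.mcp_connected


answer, still_connected = asyncio.run(main())
print(answer)           # answered: How many orders were placed today?
print(still_connected)  # False
```

Building the agent once at startup, rather than per request, avoids reconnecting to the MCP tool server on every query.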

4. Agent Initialization and Reasoning

The AI agent is created using LangChain and Anthropic Claude. The agent is configured with a predefined set of MCP tools representing allowed database operations.

A simplified example of agent initialization is shown below:

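```python
from langchain.agents import create_agent
from langchain_anthropic import ChatAnthropic

# Claude model used for reasoning and tool selection
llm = ChatAnthropic(model="claude-sonnet-4-5")

# The agent can act only through the MCP tools it is given
agent = create_agent(llm, agent_tools)
```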

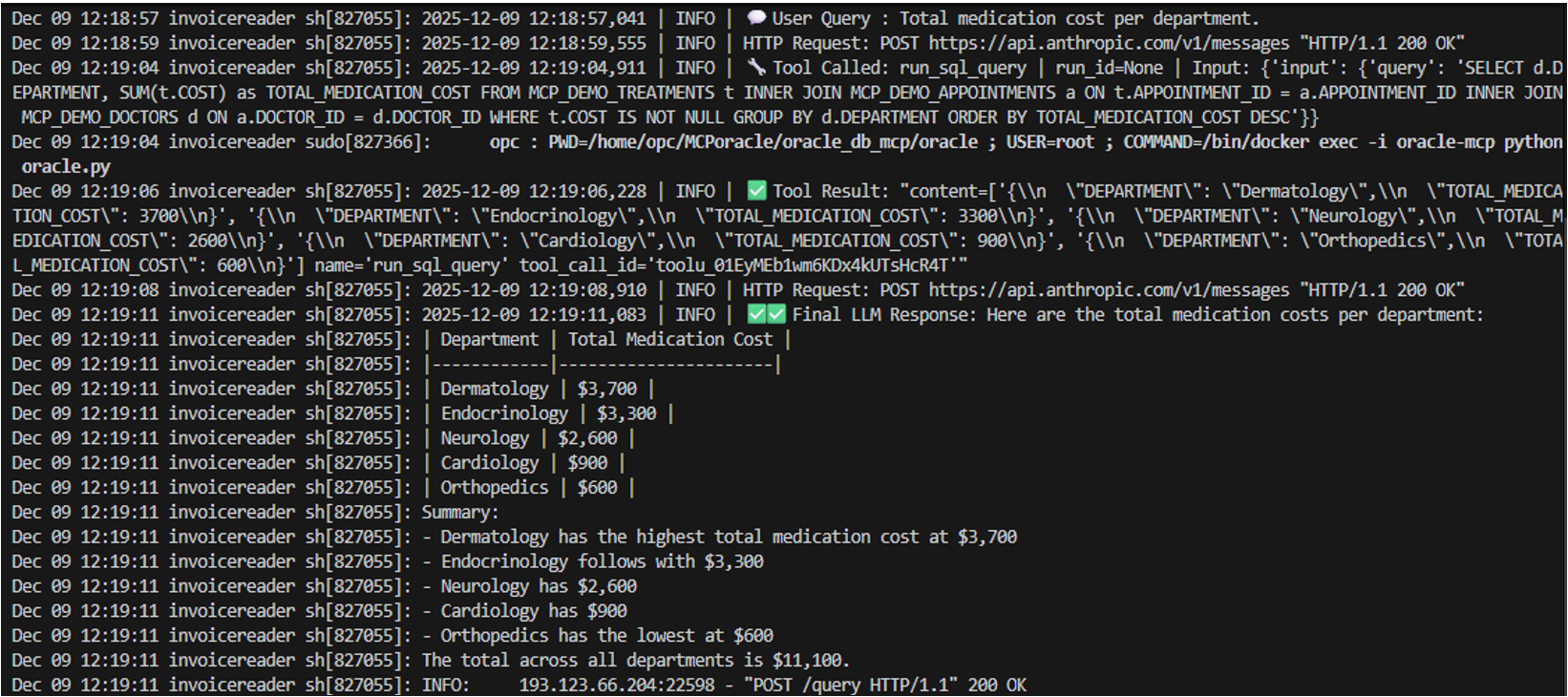

Once initialized, the agent interprets user queries, determines which MCP tools are required, and invokes those tools with structured inputs. The agent does not have direct access to the database and can operate only through the exposed tools.

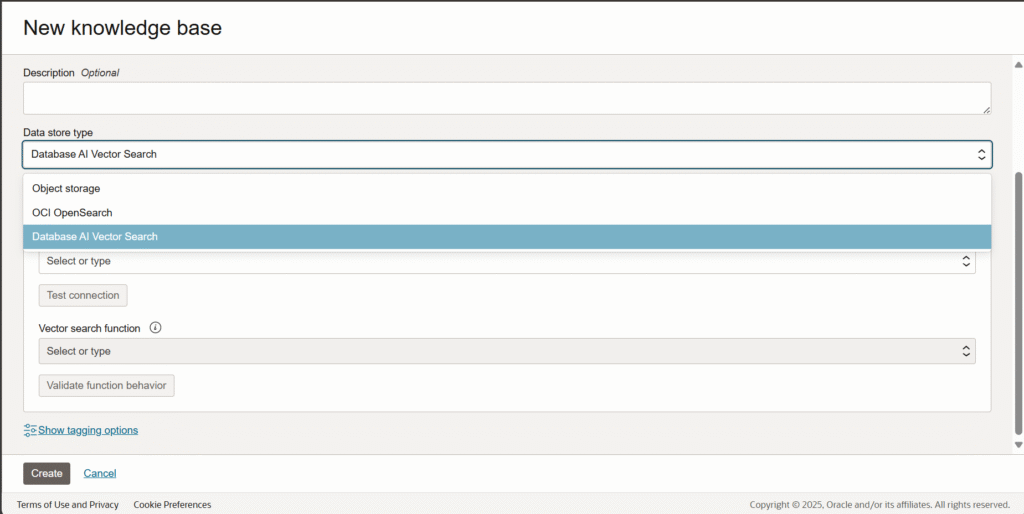

5. MCP Tool Server – Controlled Execution

The Oracle MCP Tool Server exposes database capabilities as explicit MCP tools, such as:

– Schema and metadata discovery

– Column and table inspection

– SQL query execution

Each tool validates inputs and executes operations using server-side logic. This ensures that all database interactions follow predefined rules and constraints.
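As one example of server-side validation, a query-execution tool might accept only a single read-only statement and cap the number of rows it returns. This is a sketch under assumptions: `run_sql_tool` is a hypothetical name, Python's built-in `sqlite3` stands in for Oracle ATP so it runs anywhere, and the prefix check is intentionally conservative rather than a full SQL parser (it would, for instance, reject a semicolon inside a string literal). A prefix check alone is not a complete defense, so it is best paired with a database account that has read-only privileges.

```python
import sqlite3


def run_sql_tool(conn: sqlite3.Connection, sql: str, max_rows: int = 100) -> dict:
    """Hypothetical 'SQL query execution' tool with server-side input checks."""
    statement = sql.strip().rstrip(";").strip()
    if not statement:
        raise ValueError("Empty query")
    if ";" in statement:
        raise ValueError("Only a single statement is allowed")
    if statement.split(None, 1)[0].lower() not in ("select", "with"):
        raise ValueError("Only read-only SELECT queries are allowed")
    cursor = conn.execute(statement)
    columns = [col[0] for col in cursor.description]
    rows = cursor.fetchmany(max_rows)  # cap how much data goes back to the agent
    return {"columns": columns, "rows": [list(row) for row in rows]}


# sqlite3 stands in for Oracle ATP so the sketch runs anywhere.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, region TEXT)")
conn.executemany("INSERT INTO orders VALUES (?, ?)",
                 [(1, "EMEA"), (2, "APAC"), (3, "EMEA")])

print(run_sql_tool(
    conn, "SELECT region, COUNT(*) AS n FROM orders GROUP BY region ORDER BY region"
))
```

Returning column names alongside rows gives the agent a structured result it can reason over or format for the user.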

6. Oracle Autonomous Transaction Processing (ATP)

All approved database operations are executed against Oracle Autonomous Transaction Processing (ATP). The database is accessed only through the MCP tool server, ensuring a clean separation between agent reasoning and data execution.

7. Observability and Tool Logging

Tool invocations are logged during runtime, capturing which MCP tools are used and in what sequence. This provides visibility into agent behavior and helps with troubleshooting and operational monitoring.

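```python
import logging

from langchain_core.callbacks import AsyncCallbackHandler

logger = logging.getLogger(__name__)


class ToolLogger(AsyncCallbackHandler):
    """Logs each MCP tool call made by the agent, in order of execution."""

    async def on_tool_start(self, serialized, input_str, **kwargs):
        logger.info("🔧 Tool Called: %s | input: %s", (serialized or {}).get("name"), input_str)

    async def on_tool_end(self, output, **kwargs):
        logger.info("✅ Tool Result: %s", output)


# Inside the async request handler: invoke the agent to reason over the user
# query and call MCP tools as needed, with the logger attached as a callback
response = await agent.ainvoke(
    {"messages": [{"role": "user", "content": user_query}]},
    config={"callbacks": [ToolLogger()]},
)
```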

8. Response Formatting and UI Rendering

After query execution, results are returned to the agent in a structured format. The agent formats the output into a readable representation, which is then sent back through ODA to the APEX chatbot UI for rendering.
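With LangChain agents, the invocation result typically contains a `messages` list whose last entry is the model's final reply, and that message's `content` may be either a plain string or a list of content blocks (Claude responses can include non-text blocks such as tool calls). A small helper like the one below, which is hypothetical and not part of LangChain, can flatten this into plain text before the response is sent back through ODA.

```python
def content_to_text(content) -> str:
    """Flatten a chat message's content into plain text for the chatbot UI.

    Accepts a plain string or a list of blocks such as {"type": "text", "text": "..."};
    non-text blocks (for example, tool calls) are skipped.
    """
    if isinstance(content, str):
        return content
    parts = []
    for block in content:
        if isinstance(block, str):
            parts.append(block)
        elif isinstance(block, dict) and block.get("type") == "text":
            parts.append(block.get("text", ""))
    return "\n".join(parts)


# Assumed usage in the endpoint: content_to_text(response["messages"][-1].content)
blocks = [
    {"type": "text", "text": "There are 3 orders."},
    {"type": "tool_use", "id": "call_1", "name": "run_sql", "input": {}},
]
print(content_to_text(blocks))  # There are 3 orders.
```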

Use Case 2: MCP Integration with Cato Networks

Modern enterprise networks generate a large volume of operational and performance data, including latency, packet loss, throughput, and interface-level statistics. While this data is available through network management platforms, extracting meaningful insights often requires specialized tools or manual analysis.

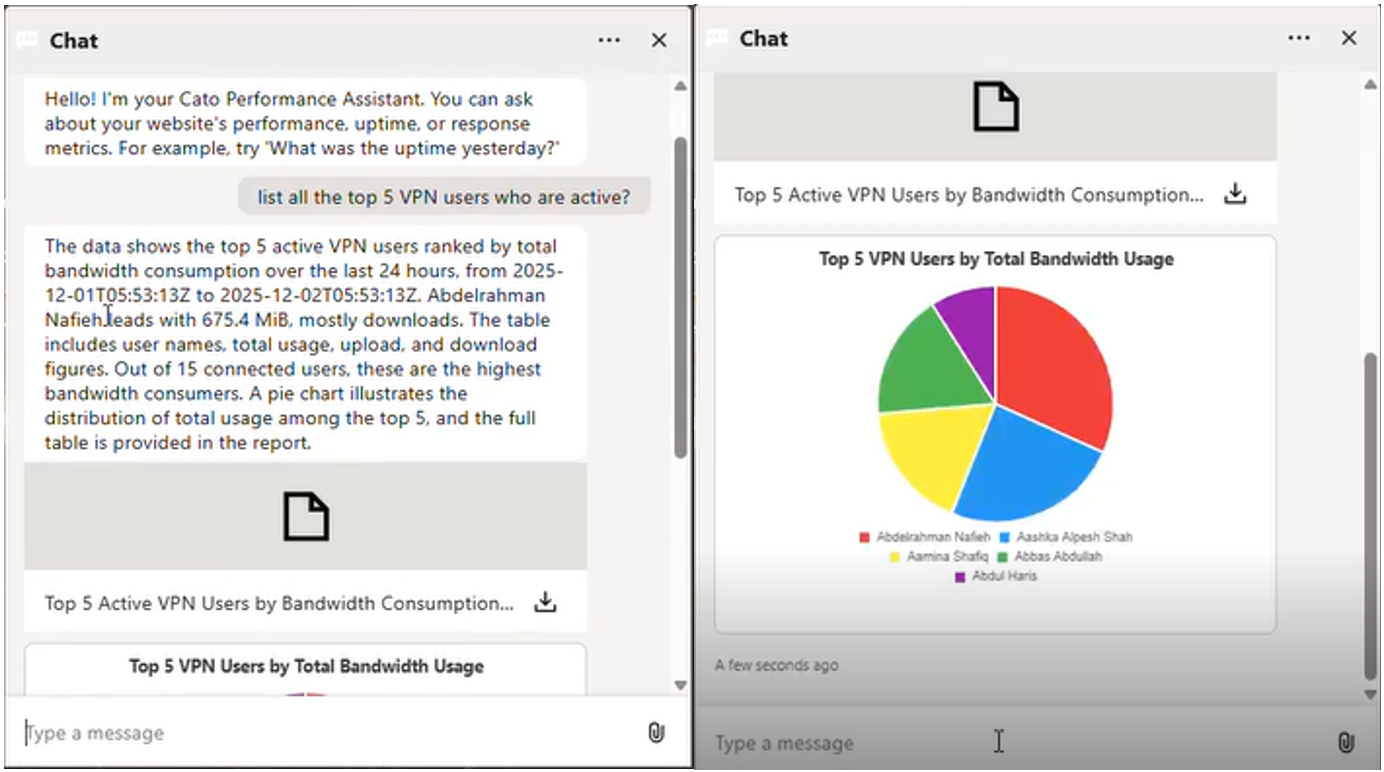

In this use case, MCP is applied to enable conversational access to network and operational metrics provided by Cato Networks. The objective is to allow users to ask analytical, multi-step questions in natural language and receive concise, actionable answers.

End-to-End Request Flow

1. User Interaction

Users submit queries through a chatbot interface. The UI layer is responsible only for collecting user input and rendering responses.

2. Oracle Digital Assistant (ODA) Routing

The user message is first processed by Oracle Digital Assistant (ODA). A Router Component analyzes the query and classifies it as a network or operational request.

3. Chatbot Component Invocation

Once identified as a network-related query, the request is routed to the Chatbot Component, which forwards it to the backend service hosting the AI agent.

4. Agent Invocation

The AI agent interprets the user’s question and determines what information is required.

This may include identifying relevant metrics, time windows, aggregation logic, and thresholds.
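Before calling any tool, the agent effectively settles these parameters. The sketch below makes that plan explicit as a data structure for a question such as "What was the average RTT for each site yesterday, and which sites exceeded 150 ms?". `MetricQueryPlan`, its field names, and the choice of UTC when resolving "yesterday" are assumptions for illustration; the actual parameters of the Cato MCP server's tools are not described here.

```python
from dataclasses import dataclass
from datetime import date, datetime, time, timedelta, timezone
from typing import Optional, Tuple


@dataclass
class MetricQueryPlan:
    """Hypothetical record of what the agent decides before calling any tool."""
    metric: str                        # which metric to fetch, e.g. "rtt_ms"
    start: datetime                    # time window start (inclusive)
    end: datetime                      # time window end (exclusive)
    group_by: str = "site"             # aggregation key
    aggregation: str = "avg"           # aggregation function
    threshold: Optional[float] = None  # optional cutoff used to flag outliers


def yesterday_window(today: date) -> Tuple[datetime, datetime]:
    """Resolve 'yesterday' into a [start, end) window in UTC."""
    start = datetime.combine(today - timedelta(days=1), time.min, tzinfo=timezone.utc)
    return start, start + timedelta(days=1)


start, end = yesterday_window(date(2025, 3, 10))
plan = MetricQueryPlan(metric="rtt_ms", start=start, end=end, threshold=150.0)
print(plan.start.isoformat(), plan.end.isoformat())
# 2025-03-09T00:00:00+00:00 2025-03-10T00:00:00+00:00
```

Note that "yesterday" is only well defined once a timezone is chosen; a site-local timezone would shift the window.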

5. MCP Tool Invocation via Cato Networks MCP Server

Instead of executing operations directly, the agent invokes tools exposed by Cato Networks’ MCP server. These tools provide controlled access to network performance and operational data.

6. Data Retrieval and Aggregation

The Cato MCP server retrieves the requested metrics from the Cato Networks platform and returns structured results to the agent.

7. Response Generation

The agent reasons over the retrieved data, performs any required aggregation or comparison, and generates a concise, human-readable response.
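For a question such as "average RTT per site yesterday, and which sites exceeded 150 ms?", the aggregation step reduces to a group-by average followed by a threshold comparison. This is a minimal sketch, assuming the metrics tool returns per-sample records with hypothetical `site` and `rtt_ms` fields; the sample values are illustrative only. Whether this arithmetic happens in the model's reasoning, in a code tool, or on the server side is a design choice; doing it in deterministic code makes the numbers reproducible.

```python
from collections import defaultdict
from statistics import mean


def sites_over_threshold(samples, threshold_ms=150.0):
    """Average RTT per site, plus the sites whose average exceeds the threshold."""
    by_site = defaultdict(list)
    for s in samples:
        by_site[s["site"]].append(s["rtt_ms"])
    averages = {site: mean(values) for site, values in by_site.items()}
    exceeded = sorted(site for site, avg in averages.items() if avg > threshold_ms)
    return averages, exceeded


samples = [  # hypothetical records, illustrative values only
    {"site": "LON", "rtt_ms": 40.0}, {"site": "LON", "rtt_ms": 60.0},
    {"site": "SYD", "rtt_ms": 180.0}, {"site": "SYD", "rtt_ms": 200.0},
]
averages, exceeded = sites_over_threshold(samples)
print(averages)  # {'LON': 50.0, 'SYD': 190.0}
print(exceeded)  # ['SYD']
```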

8. Response Delivery

The final response is sent back through ODA and rendered in the chatbot UI.

This flow ensures that network data is accessed only through approved MCP tools while allowing the agent to perform complex reasoning.

Role of MCP in the Cato Networks Integration

In this use case, MCP acts as a standardized integration contract between the AI agent and the network platform.

Key characteristics of this integration include:

- The MCP server is provided by Cato Networks

- The agent consumes MCP tools rather than interacting with proprietary APIs directly

- All network data access is encapsulated behind tool interfaces

- The agent focuses solely on reasoning and decision-making

This approach allows the same agent architecture to be reused across domains, while delegating execution and data access to platform-owned MCP servers.

Analytical Query Examples

The integration supports analytical and multi-step questions that go beyond simple lookups. Examples include:

Q: What was the average RTT for each site yesterday, and which sites exceeded 150 ms?

A: The agent retrieves round-trip time (RTT) metrics, computes averages per site, applies threshold logic, and highlights outliers.

Q: Which WAN interface experienced the highest upstream packet-loss percentage in the past 24 hours?

A: The agent compares packet-loss metrics across interfaces and identifies the worst-performing link.

Q: What is the combined downstream throughput for all interfaces during business hours this month?

A: The agent aggregates throughput metrics across interfaces and applies time-based filtering before generating the response.

These examples demonstrate how MCP enables the agent to reason over structured network data and deliver insights in a conversational format.
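The packet-loss question involves a subtle choice: per-interface loss can be computed from summed packet counters or by averaging per-interval percentages, and the two can disagree when traffic volume varies between intervals. The sketch below uses summed counters and applies the 24-hour window. The record fields (`interface`, `ts`, `sent`, `lost`) and values are hypothetical, since the Cato MCP server's actual output format is not described here.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone


def worst_upstream_loss(samples, now, window=timedelta(hours=24)):
    """Return (interface, loss_pct) for the highest upstream loss in the window.

    Loss is computed from summed packet counters rather than by averaging
    per-interval percentages, so busier intervals carry more weight.
    """
    sent = defaultdict(int)
    lost = defaultdict(int)
    for s in samples:
        if now - window <= s["ts"] <= now:
            sent[s["interface"]] += s["sent"]
            lost[s["interface"]] += s["lost"]
    loss_pct = {i: 100.0 * lost[i] / sent[i] for i in sent if sent[i] > 0}
    if not loss_pct:
        return None
    worst = max(loss_pct, key=loss_pct.get)
    return worst, loss_pct[worst]


now = datetime(2025, 3, 10, 12, 0, tzinfo=timezone.utc)
samples = [  # hypothetical records, illustrative values only
    {"interface": "WAN1", "ts": now - timedelta(hours=2), "sent": 10_000, "lost": 50},
    {"interface": "WAN2", "ts": now - timedelta(hours=3), "sent": 8_000, "lost": 160},
    {"interface": "WAN2", "ts": now - timedelta(hours=30), "sent": 1_000, "lost": 900},
]
print(worst_upstream_loss(samples, now))  # ('WAN2', 2.0)
```

The third record falls outside the 24-hour window and is ignored, which is why WAN2's loss is 2.0% rather than being inflated by the older interval.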

Conclusion

The Model Context Protocol provides a robust architectural foundation for integrating AI agents with enterprise systems in a controlled and predictable manner. By adopting MCP, organizations can enable AI-driven interactions while preserving a clear separation between agent reasoning and system execution.

The use cases discussed in this blog post illustrate how the same MCP-based design can be applied across diverse enterprise domains, from transactional databases such as Oracle ATP to network and operational platforms like Cato Networks. By standardizing how agents interact with external systems through explicit tools, MCP enables scalable, reusable, and governed AI architectures that can be extended as enterprise needs evolve.